Technology

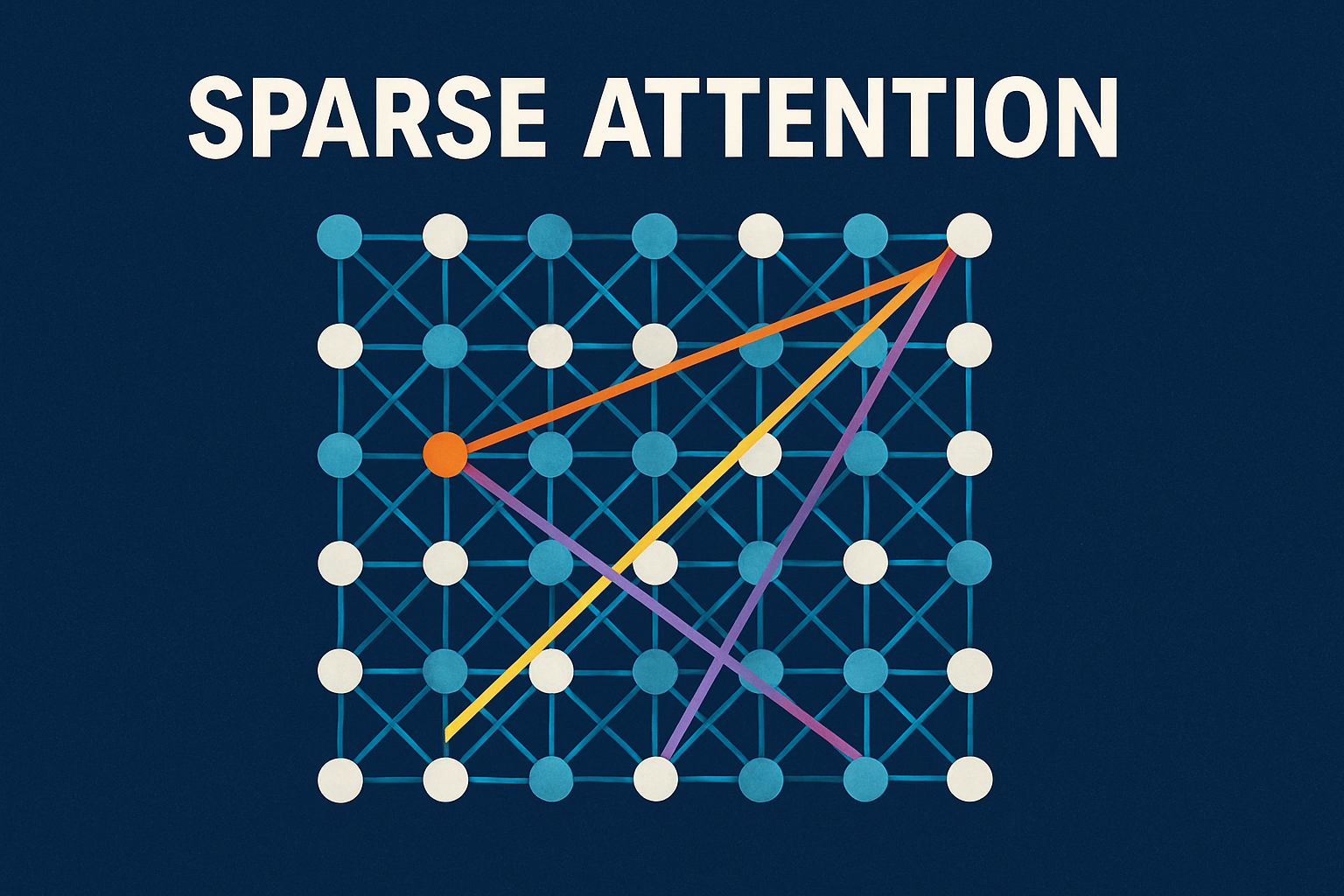

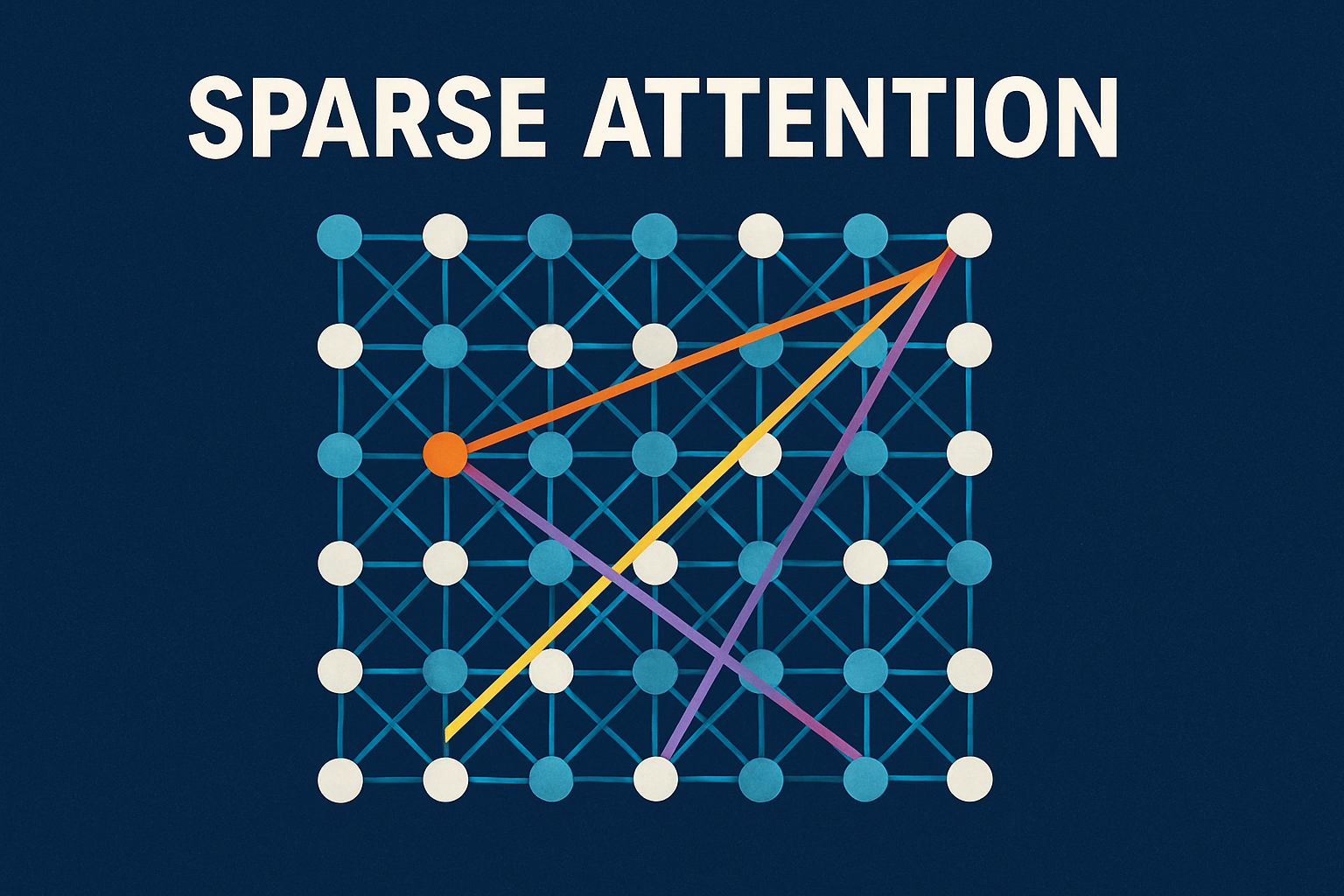

Sparse Attention

Sparse attention means the model focuses only on a small, important subset of tokens instead of attending to all tokens, reducing computation and memory usage …
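As a rough illustration of the idea in this excerpt, here is a minimal sketch of one possible sparse scheme: top-k selection, where a query keeps only the `k` highest-scoring tokens. The function name `topk_sparse_attention` and the choice of top-k are assumptions made for this example, not something the post specifies.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def topk_sparse_attention(query, keys, values, k):
    """Attend only to the k keys with the highest scaled dot-product score.

    Hypothetical helper for illustration; returns the output vector and
    the sorted indices of the tokens that were kept.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * x for q, x in zip(query, key)) / scale for key in keys]
    # Keep the indices of the k highest-scoring tokens; the rest are ignored.
    kept = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in kept])
    dim = len(values[0])
    output = [
        sum(w * values[i][d] for w, i in zip(weights, kept))
        for d in range(dim)
    ]
    return output, sorted(kept)


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.9, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0], [0.0, 2.0]]
output, kept = topk_sparse_attention(query, keys, values, k=2)
```

Note that this sketch still scores every key before discarding most of them, so it only saves work in the softmax and the weighted sum. Fixed patterns such as a local window avoid computing the skipped scores in the first place.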

Read More

Insights, tutorials, and updates from the Pynatic team. Stay ahead with the latest in technology, AI, and software development.

Technology

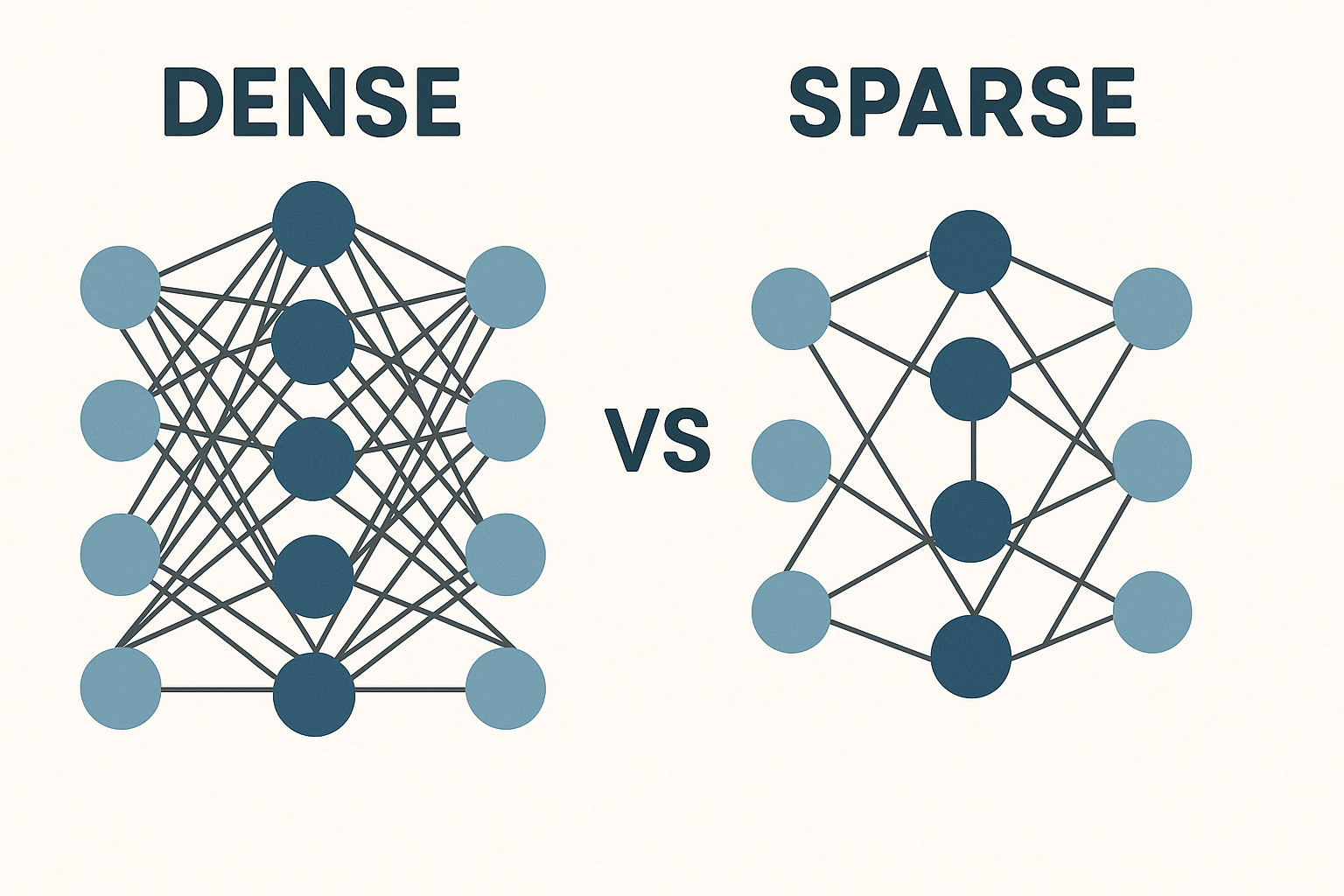

A dense neural network connects every neuron in one layer to every neuron in the next, using more parameters and computation, while a sparse neural network has fewer connections, making …
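To make the difference in connection count concrete, here is a small sketch that models sparsity as a 0/1 mask over a layer's weight matrix. The mask representation and the helper names are assumptions for illustration only, and biases are left out to keep it short.

```python
def dense_connections(n_in, n_out):
    """A dense layer has one weight per (input, output) pair."""
    return n_in * n_out


def sparse_connections(mask):
    """Count the connections that a 0/1 mask keeps."""
    return sum(sum(row) for row in mask)


def sparse_forward(inputs, weights, mask):
    """Compute each output using only the connections where the mask is 1."""
    return [
        sum(w * x for w, m, x in zip(w_row, m_row, inputs) if m)
        for w_row, m_row in zip(weights, mask)
    ]


inputs = [1.0, 2.0, 3.0]
weights = [[1.0, 1.0, 1.0], [2.0, 2.0, 2.0]]  # 2 outputs, 3 inputs
mask = [[1, 0, 1], [0, 1, 0]]                 # keeps 3 of the 6 connections

dense_count = dense_connections(3, 2)
sparse_count = sparse_connections(mask)
outputs = sparse_forward(inputs, weights, mask)
```

Here the masked layer performs three multiplications instead of six. A practical implementation would store only the kept weights rather than a full matrix plus a mask.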

Read More